Community Team Developmental Evaluation

To test the validity of an evolving process, program or service, such as a new Community Team, Golden & Area A can make use of Developmental Evaluation (DE).

"Developmental Evaluation supports innovation development to guide adaptation to emergent and dynamic realities in complex environments. Innovations can take the form of new projects, programs, products, organizational changes, policy reforms, and system interventions. A complex system is characterized by a large number of interacting and interdependent elements in which there is no central control. Patterns of change emerge from rapid, real time interactions that generate learning, evolution, and development – if one is paying attention and knows how to observe and capture the important and emergent patterns. Complex environments for social interventions and innovations are those in which what to do to solve problems is uncertain and key stakeholders are in conflict about how to proceed."

- Patton (2010) Developmental Evaluation cited by BetterEvaluation.org

DE is ideal for the formation of the Community Team because this program has not existed before and its activities and outcomes are uncertain and emerging (The O'Halloran Group, 2015). A DE process can be crafted between the funders (local government), staff and Community Team members so that everyone gets what they need out of the process - or can at least evaluate the evolving process fairly, comprehensively and openly. But it must be implemented at the beginning.

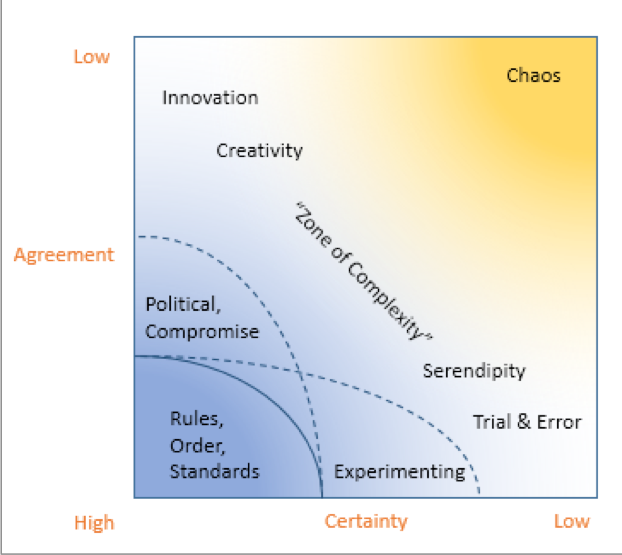

Figure: Complexity Matrix

Patton (2006), p.30; cited in BetterEvaluation.org

The Challenge

The challenge we are trying to address with the Community Team is the lack of collaborative prioritization, decision-making and resource allocation in Golden & Area A's vibrant community. As many stakeholders see this solution as 'far from agreement' and 'far from certainty,' DE will help us evaluate the systemic change to ensure collaborative prioritization, improved decision-making and resource allocation, with the necessary community resources.

Image: Spark Policy Institute's Developmental Evaluation Resources.

Readiness

It's critical to determine if your initiative is ready for DE. Most things in a systems approach are interrelated. The key with DE is not getting stuck with the same end-goal in mind. DE provides space to adjust your goal, based on the realities.

Identify the primary focus (the initiative), then work through diagnosis, scoping, adaptive design and adaptive implementation. These 4 quadrants are NOT sequential; they need to be addressed based on your challenge and progress, often iteratively.

Diagnosis

- Evaluative culture
- Developmental context
- Adaptive leadership

Scoping

- The Initiative theory
- User profile
- Design/methods
- Budget options
- Evaluator options

Adaptive Design

- Debate & negotiate methods
- Simulate methods and findings
- Finalize design

Adaptive Implementation

- Gather data
- Present results
- Produce report
- Follow up to ensure use

This supports a process of ongoing learning, which is vital to improving community capacity in such a way that fits the realities of the individual community and its priorities.

7 Points about DE from Casey Boodt of Innoweave

The following was found in Casey Boodt's Innoweave presentation on June 2, 2015.

1. Orientation. "DE is not a recipe," it is a good enough solution (Mark Cabaj). "It's a mindset of inquiry into how to bring data to bear on what's unfolding so as to guide and develop the unfolding." - Michael Quinn Patton. Developmental Evaluation. 2010: pp.75-6.

What that means and the timing of the inquiry will depend on the situation, context, the people involved, and the fundamental principle of what makes sense for program development.

2. Purpose. DE focuses on developmental questions: What's being developed? What information do people need to make decisions? How is what's being developed and what's emerging to be judged? Given what's been developed so far and what has emerged, what's next?

3. Evaluator Relationship. Traditional evaluation positions the evaluators outside the action to assure independence and objectivity. DE positions the evaluator as an integrated team member, integrated into the action and ongoing interpretation and decision-making processes.

4. Evaluator Role/Function. The Developmental Evaluator inquires into developments, tracks developments, facilitates interpretation of developments and their significance, and engages with innovators, change agents, program staff, participants in the process and funders around making judgements about what is being developed, how it is being developed, the consequences and impacts of what has been developed, and the next stages of development. - Michael Quinn Patton. Developmental Evaluation. 2010: p. 227.

The key is asking "why?" Start with "Surface issues" - only peel back the onion as far as necessary. Then, "Framing Concepts" - with a glossary of terms, understand the consequences of tackling issues outside people's own 'frame.' Next, "Tests Quick Iterations" - test to see if there is a foundation for moving in that particular direction. Finally, "Tracking Developments" - share results with stakeholders. This makes things clearer for all stakeholders and for subsequent iterations.

5. Evaluation Design. Contrary to the usual practice in evaluation of fixed designs that are implemented as planned, DE designs can change as the innovation unfolds and changes. (Michael Quinn Patton. Developmental Evaluation. 2010: pp. 335-6.) Evaluation design includes: 1) Mapping system dynamics & interdependencies (why things work the way they do), 2) Identifying partner assumptions (and motivations), 3) Identifying the information needs of partners (to examine the status quo and to initiate changes to it), 4) Establishing decision points (it is critical to know when to quit), 5) Selecting meaningful indicators, 6) Creating space for co-learning. (Also see: Adopting a Lens of Complexity in Community Change by Mark Cabaj, which talks about the importance of 90-day experimentation campaigns.)

6. Reports. Dynamic complexities don't slow down or wait for evaluators to write their reports, get them edited, and then approved by higher authorities. Any method can be used but will have to be adapted to the necessities of speed, real-time reporting and just-in-time, in-the-moment decision-making (Michael Quinn Patton. Developmental Evaluation. 2010: pp. 335-6). This can be a 1-page summary, a 2-page newsletter or a monthly editorial. (Note: Engineers present an annual 'failures report.') This increases the public's awareness and may encourage politicos to take action or engage in the process. In DE, reporting is ongoing so as to affect collective learning.

7. Accountability. In traditional evaluation, accountability is determined after the fact, and is focused on and directed towards external stakeholders. In DE, accountability is real-time, centered on the program team's commitments to each other and their shared need to have a positive impact in an area of mutual concern.

Fit

There is no 'generic DE model.' In determining the best fitting DE model, it is important to understand and identify your approach.

And how can your information fit the users' model?

We have not been engaging for 'engagement's sake.' We have been engaging for the purpose of the end goal.

What is the challenge that the Community Team can solve?

What is the challenge we are trying to address?

The Community Team addresses the challenge of having 2 local governments and 100 non-profits serving the 6,766 residents of Golden & CSRD Area A without a shared understanding of 1) what the key issues are, 2) how they can address them individually, or 3) how they can address them collectively.

Why is the status quo unacceptable?

The status quo is unacceptable because the community of Golden & Area A lacks the space to create a shared vision. Without a shared vision, decisions and activities of each separate local government area will likely not be optimized and may actually work against each other. This shared vision and stewardship group (known as a Community Team) would aid in issue prioritization, community alignment, community decision-making and public resource allocation.

What are the characteristics of your emerging strategy to address it?

The emerging strategy utilizes representatives from local government and supported leaders from groups of local non-profit organizations to 1) lead the shared vision process (Integrated Community Sustainability Plan or ICSP), and 2) collaborate in the short and long term to maximize the allocation and impact of limited community resources (money, people, time) to address community priorities. This is a complex problem if there ever was one, and addressing it calls for innovation, creativity, serendipity and trial & error.

The emerging strategy has been to help elected officials, non-profit leaders, key volunteers and staff understand the disconnection between resources and addressing needs. However, this model does not exist in very many other communities - and not to this degree of control - which makes the idea of re-delegation of power very scary for some.

How do you understand the connection between your activities and the outcome you seek?

The activities at the Community Team prototyping stage need to support and encourage the building of trust between local sub-sector leaders (for example, Health & Social Services Orgs; Arts, Culture & Heritage Groups; Sports Groups, etc.) and elected officials and their key staff.

As an initiative that can benefit from DE, it is critical that the Community Team stakeholders understand that the principles of DE encourage an iterative process, similar to Wheatley & Frieze's Lifecycle of Emergence. Through DE, we may learn that the Community Team may not be the best fit for now, or 6 months from now. (Or a Community Team format may be embraced for its transparency and last for several years before evolving into the next 'community development model.')

As people get more comfortable with the inevitability of change, they will be more prepared to move with it and even lead it.

Over time, we will be able to identify gaps and overlap between entities, as well as maximize the utilization of resources between similar community groups.

But first, since this is a complex system that is always in flux, let's take some bite-sized chunks.

Comparing DE and Traditional Evaluation

DE informs the evolution of a group's program theory and group's theory of change - from little refinements to radical changes - which become the progress markers of DE.

Developmental Evaluator Competencies

Aware of the dynamic nature of innovation and complexity. High tolerance for adaptive processes and comfort with ambiguity. Strong interpersonal skills to deal with hyperactive, short-attention-span, action-oriented innovators. Able to easily draw on a larger tool kit of evaluative methods. Skilled at real time/flexible and adaptive evaluation design and methods. Facilitation, listening and partner management skills. Also, the DEvaluator should know when to use different consultation formats (online surveys v. interviews).

Cluster 1: Diagnosis

Success depends on the evaluative culture. How prominently is learning a part of your culture?

There are several niches or contexts for evaluation: developmental, formative, and summative.

There are several sub-niches (5) for DE.

Where organizations or groups have multiple contexts operating at the same time, Patch Evaluation might be necessary.

Developmental contexts and DE require stewardship or adaptive leadership in order to be useful.

The "perfect" conditions for DE are likely to be imperfect for other types of evaluation.

Task 1: Assessing Evaluation Culture

Self-Reflection & Examination. Deliberately seeks evidence on what is emerging. Uses data to challenge what it is doing. Values candor, challenge and genuine dialogue.

Evidence-Based Learning. Makes time to learn in a structured fashion. Learns from mistakes and weak performance (which may be difficult for some people or situations). Encourages knowledge sharing.

Task 2: Assessing Context & Purpose of DE

Developmental

Initiative is innovating and in development.

Evaluation is used to provide feedback on the creation of the initiative.

Formative

Initiative is stabilizing and is being refined.

Evaluation is used to help improve the initiative.

Summative

Initiative is stabilized.

Some DE Niches

Preformative: The initiative is being created through a process of trial-and-error.

Model Replication: The initiative model is being adopted and adapted for a new context.

Ongoing Development: The initiative is constantly adapted to fit an ever-changing context.

Crisis: The initiative is an emergency response to a crisis situation.

Cross-Scale Complexity: The initiative is designed to change "systems" at multiple levels and sites.

Patch Evaluation

Some contexts require multiple evaluations to help with developmental, formative and summative decisions.

Task 3: Assessing Stewardship & Adaptive Leadership

The knowledge that innovation is an ongoing process of experimentation, learning and adaptation.

The ability to navigate and improvise on a shifting landscape, en route to a goal, and be open to the possibility that the goal may change in response to new learnings and opportunities.

Cooperation is always contingent and needs to be continually reinforced.

Play to the strengths of the individuals in your group. There is likely an opportunity and a need to use each of their strengths, in both traditional (management & leadership) and stewardship (adaptive leadership) approaches.

Assess Readiness

1. How would you assess your culture, context and leadership? Are the conditions right for DE? Which aspects "are in need of attention," "can proceed with caution," or "are good to go"?

2. What new questions emerge?

3. Why do you think DE might be appropriate (or not...)?

1.1 Culture: "are in need of attention" - We have a culture of silos with occasional coordination. It is not natural to openly evaluate each other.

1.2 Context: "are good to go" - Those participants who have been on the Community Team journey have a good understanding of context, but have not been asked to push it further. Maybe it's time to do that.

1.3 Leadership: "are in need of attention" - Our leaders are staff and keep their cards close to their chest. Our politicians are facilitators and are not used to leading a broad, collaborative initiative - and have actually been reprimanded in the past for overstepping their "water, sewer, roads" mandate.

2. The questions that emerge are:

What do the key decision-makers need to know?

What type of public funds can non-elected Committees allocate?

What power is given up with this model?

Where are the weak points to the model and to the new system?

DE indicators? Number of CT meetings (increase); number of Sector meetings (increase); resources allocated (increase); number of inter-organizational projects (increase); number of inter-sector projects (increase).

3. DE is appropriate for the goal of measuring a Community Team, but the CT concept still needs to be understood. Generate evidence of small successes of collaborative decision-making (e.g., Health & Social Services Organizations Alignment Project, Trails Meeting with CBT, Youth Coalition, Age Friendly Community Plan & Committee, etc.). Show how working together works v. how silo activities work.

When developing our theory of change:

What are the goals of CT? (3 - 4)

What things contribute to our access to services?

Evaluation is about "being adaptive" and it doesn't take just one model. Good teams will be able to adapt a model to their purposes.

Cluster 2: Scoping

Theory of Change

User Profile

Design methods

Evaluator

Highlights

1. Evaluation

2.

3.

Some different ways to conceptualize the theory of your initiative:

theory of change, logical framework, program logic, program model, intervention logic, intervention model, chain of objectives, outcomes map, impact, pathway analysis, program theory, theory of action, working hypothesis, hypothesis of record.

Develop a Logic/Theory of an Initiative

From --> To

Implicit and not documented --> Explicit and documented

Fragmented amongst stakeholders --> Shared amongst stakeholders

Unevenly plausible, do-able and testable --> Increasingly plausible, do-able and testable

Developing a theory of change or working hypothesis is a dynamic (and often messy) process that occurs over time through board-room discussions, on-the-ground experimentation, and frequent check-ins, debates and adjustments based on new learnings, shifts in context and the arrival of new actors.

What is a Theory of Change?

An approach to thinking critically about what is required to bring about a desired social change

A map - how a group of stakeholders expects to reach a commonly understood long-term goal

Based on your understanding of the issue.

Why use a Theory of Change?

- Gives a big-picture understanding of an issue and of what is required to support the change process

- Helps stakeholders see where their specific initiatives fit into the "big picture" (can help them align their work)

- Shows where a specific initiative needs to link to others in order to achieve the desired impact

- Helps identify indicators for evaluation

How do you conceptualize your initiative's logic/theory?

1. Start with the Life Cycle of Emergence, first in general and then specific to the GAI/CED opportunity

2. Then show clusters/coalitions from the Constellation Model

3. Next, add all coalitions and supporting resources into one big Constellation Model

4. Now, show potential/draft Goals of the Community Team and the supporting developmental evaluation metrics feeding into them. (Use the GECDC Terms of Reference as an example.)

What outstanding questions do you have about the way you conceptualize your initiative:

Is it alright to go back and revise a previous model or does that just encourage stakeholders to sit back, say "send it back" and wait until it's "perfect"? How many iterations of models should one expect to need? How do you know when you've found the right model?

Next Steps:

Document successes

Note the challenge (openly) - there are no resources for this group to meet and there appears to be little political will to change that

Highlights:

1. One of the biggest reasons that evaluations are not used as much as they could be is that people design and implement "cookie cutter" designs.

2. Evaluations have different "users" who want different questions answered in order to make different kinds of decisions (aka "uses").

3. You can improve the probability that an evaluation's "users" will "use" the evaluative data if you develop a user profile and use it to shape your evaluation.

There are great resources from the Aspen Institute and GrantCraft.

Mapping Information Flows

Issue - surrounded by many Stakeholders

How do we all understand the situation?

Who needs what information, when?

What information will secure everyone's ongoing cooperation?

How will people contribute to data collection?

How will we learn together?

How will we know if we are successful?

This is the beginning of the Communications Strategy. (Remember that stakeholders will each expect to receive the information in a certain way.)

Differentiate between Partners ("Program Development Team," who need to make decisions that will affect the initiative) & Secondary Users (Community Team Reps or Sector Group Reps: those who want to be kept in the loop).

Canadian Evaluation Standards

DE needs to meet these five Canadian Evaluation Society (CES) standards:

1. Utility – does the evaluation generate useful data for the users? (Don't ask unless it affects your decision-making.)

2. Feasibility – is the evaluation design efficient and doable within the financial, technical and timing constraints?

3. Propriety – is the evaluation done in a way that is proper, fair, legal, right and just?

4. Accuracy – to what extent are the evaluation representations, propositions, and findings, especially those that support interpretations and judgments about quality, truthful and dependable?

5. Accountability – to what extent are evaluators and evaluation users focusing on documenting and improving the evaluation processes?

(CES, 2015).

Innoweave Support

After participating in their national workshops and making a formal application, Innoweave can provide up to $30,000 to help hire a coach to implement Developmental Evaluation. The deadline is July 2, 2015.